Improving Docker Security: A Better Way to Secure Your Container Network

Using Linux containers like those enabled by Docker provides a perfect encapsulation method to package application components, or micro-services. Is there any need to worry about Docker security?

Some would argue that simply deploying applications as container-based micro-services improves overall security and reduces the application’s attack surface.

Let’s assume that everyone follows the Docker security best practice guide and

• Secures and hardens host machines,

• Scans all container images for vulnerabilities,

• Sets upper limits on resource usage, such as memory, CPU, and volumes.

If application containers do not need to talk to the outside world or to other containers, it should be easy to run them in a relatively safe and isolated environment. Even if one container is bad, is hacked, or goes rogue, it won’t be able to affect other containers or applications.

One real example I heard about is a setup where containers are used to run student programs. The results are written to a file on a file server, and each container is destroyed once it finishes. In this situation, which resembles a non-persistent virtual machine environment but is much more lightweight, security is less of a concern. But for most real-world applications, especially complex business applications, containers do need to talk to the outside world or to other containers frequently to do their work.

Containerization is changing the way applications work internally and with each other. When applications are split into micro-services or containers, their existing internal communication methods (such as IPC or RPC) become network-based protocols such as REST or plain HTTP requests. When a container opens or publishes a port, the Docker daemon adds the corresponding iptables forwarding rules. Let’s take a look at what’s being added or modified in detail.
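To make the shift from in-process calls to network calls concrete, here is a minimal sketch using only the Python standard library. The function `price_with_tax`, the `/price/<amount>` path, and the helper `call_over_http` are hypothetical names invented for this illustration. The same logic is called once directly and once over HTTP; only the second call crosses a socket, which is the point at which firewall rules start to matter.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def price_with_tax(amount: float) -> float:
    """The 'service' logic; in a monolith this is called directly."""
    return round(amount * 1.2, 2)


class PriceHandler(BaseHTTPRequestHandler):
    """Exposes price_with_tax over HTTP, as a separate container would."""

    def do_GET(self):
        # Expect paths such as /price/10.0 (hypothetical route).
        amount = float(self.path.rsplit("/", 1)[-1])
        body = json.dumps({"total": price_with_tax(amount)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def call_over_http(amount: float) -> float:
    """Start the service on a free loopback port, call it once, stop it."""
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/price/{amount}"
        with urllib.request.urlopen(url) as resp:
            return json.loads(resp.read())["total"]
    finally:
        server.shutdown()
        server.server_close()


# Same result, but the second call is now visible on the network.
print(price_with_tax(10.0))
print(call_over_http(10.0))
```

The sketch binds to the loopback address only. A published container port has no such restriction by default.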

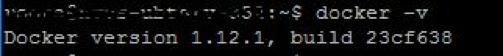

This is my Docker environment; it’s at the latest version, v1.12:

These are the iptables rules before I load any containers:

As a simple test, I’ll load an nginx container and publish its port 80 to the outside world through host port 8080 (for example, with `docker run -d -p 8080:80 nginx`):


Now let’s check the iptables rules again:

So the Docker daemon has added rules that allow “anywhere” to talk to the container at 172.17.0.2 on port 80 through host port 8080. This means that the port is wide open and accessible, whether connections come from an internal network or an external one.
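One way to spot such wide-open rules is to scan the rule set for ACCEPT or DNAT entries that carry no source restriction. The sketch below does this over a few sample lines in `iptables-save` format. The sample rules only approximate what Docker adds for a published port and were not captured from the host described here. `parse_rule` and `open_to_anywhere` are hypothetical helper names, and the parser is deliberately simple (for instance, it does not handle a negated `! -s` match).

```python
import shlex

# Illustrative rules in iptables-save format, approximating what Docker
# adds for a port published as 8080:80. Not captured from a real host.
SAMPLE_RULES = """
-A DOCKER ! -i docker0 -p tcp -m tcp --dport 8080 -j DNAT --to-destination 172.17.0.2:80
-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 80 -j ACCEPT
-A INPUT -s 10.0.0.0/8 -p tcp -m tcp --dport 22 -j ACCEPT
"""

# Flags this sketch understands, mapped to the field they fill.
FLAGS = {"-A": "chain", "-s": "source", "--dport": "dport", "-j": "target"}


def parse_rule(line: str) -> dict:
    """Extract chain, source, destination port, and target from one rule.

    Simplification: a negated source match ("! -s ...") is not handled.
    """
    tokens = shlex.split(line)
    rule = {"chain": None, "source": "anywhere", "dport": None, "target": None}
    for i, tok in enumerate(tokens[:-1]):
        if tok in FLAGS:
            rule[FLAGS[tok]] = tokens[i + 1]
    return rule


def open_to_anywhere(rules_text: str) -> list:
    """Return ACCEPT/DNAT rules that have no source restriction."""
    found = []
    for line in rules_text.strip().splitlines():
        rule = parse_rule(line)
        unrestricted = rule["source"] in ("anywhere", "0.0.0.0/0")
        if rule["target"] in ("ACCEPT", "DNAT") and unrestricted:
            found.append(rule)
    return found


for rule in open_to_anywhere(SAMPLE_RULES):
    print(rule["chain"], rule["dport"], rule["target"])
```

With the sample input, the two Docker-style rules are reported, while the SSH rule restricted to `10.0.0.0/8` is not.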


This is completely understandable because the Docker daemon doesn’t know the purpose of publishing port 80. It could be for serving internal applications, or it could be for serving external requests. To get everything working with the least chance of creating networking problems, the Docker daemon simply adds rules that give anyone access on port 80.

Looking at this from the user’s (developer, DevOps, security) point of view, is this really the way it should be? For testing it might be OK, but for production applications, probably not.

If, for example, I deploy an Nginx load balancer container, I may use it as the entry point for external web requests, or I may use it as the internal load balancer for my web application cluster. Before the applications are actually running, there is often not enough information to know how they will or should communicate. Of course, we could ask developers or DevOps to tell us, so that we could manually configure additional rules. The problem is that this is a tedious, error-prone process that is often forgotten or ignored.

“Is there any way to discover application behavior and automatically set rules that don’t require manual editing as containers scale up or down, even on a cluster of hosts?”

To give containers better routing rules and more flexible security policies, we need run-time application knowledge. This is critical because application behavior is not static. Containers deployed as micro-services can be reused or change roles dynamically. The whole containerized environment is dynamic: it is meant to scale quickly and to move workloads between hosts. Static iptables rules simply cannot keep up with the changes in this hyper-dynamic environment.

NeuVector’s container security solution will be able to address this. NeuVector will learn container behavior at run-time, work out the best rules for the containers, and lock them down, all without a restart or redeployment step. No manual updating of iptables is required, although customized policies can be added if desired.
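The general learn-then-lock-down idea can be illustrated with a toy allowlist. This is a sketch of the concept only and not NeuVector’s implementation. The `Flow` and `LearnedPolicy` classes and the service names are hypothetical. Flows are keyed on service names rather than IP addresses, on the assumption that addresses change as containers move between hosts.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Flow:
    """One observed connection: who talked to whom, on which port."""
    source: str
    destination: str
    port: int


@dataclass
class LearnedPolicy:
    """Toy allowlist: record flows while learning, then reject unknown ones."""
    allowed: set = field(default_factory=set)
    learning: bool = True

    def observe(self, flow: Flow) -> bool:
        """Return True if the flow is permitted under the current mode."""
        if self.learning:
            self.allowed.add(flow)  # everything seen while learning is kept
            return True
        return flow in self.allowed

    def lock_down(self) -> None:
        """Stop learning; from now on only recorded flows are permitted."""
        self.learning = False


policy = LearnedPolicy()
policy.observe(Flow("nginx-lb", "web-1", 80))   # hypothetical services
policy.observe(Flow("web-1", "db", 5432))
policy.lock_down()

print(policy.observe(Flow("nginx-lb", "web-1", 80)))  # seen while learning
print(policy.observe(Flow("nginx-lb", "db", 5432)))   # never seen, rejected
```

A real system would also have to decide how long to learn and how to handle legitimate behavior that appears only after lock-down. The sketch ignores both questions.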

While the Docker daemon or other container overlay networking sets up basic layer 3 rules in iptables or uses internal routing tables, NeuVector will create protections all the way up to layer 7. NeuVector is therefore compatible with and complementary to Docker security, and it provides much more granular and precise security control for your application containers. The benefit is that policy creation is automated, so it scales and adapts to constantly changing container environments. This provides enhanced security with no manual configuration of static rules.
